Travel Buddy

It’ll help you leave yesterday behind and find what’s ahead :)

Using the micro:bit, I want to create a portable electronic travel device that uses an image recognition API to detect objects and gives tactile or auditory feedback, vibrating or beeping more rapidly as the user approaches an object. It is meant to help visually impaired people avoid obstacles, travel safely and identify objects in their surroundings.

Target Problem

Research

Independent travel can be challenging and a source of anxiety for many visually impaired people. When travelling, people who are blind or visually impaired can choose among several aids: a human guide, which involves holding onto someone’s arm; a cane to detect obstacles and elevation changes; a dog guide; or no additional aid. Even with these resources, travelling can be difficult, and some people avoid it altogether. With my design, I hope to give users another aid for independent travel that helps visually impaired people be more autonomous.

Design Criteria

Musts:

Tactile and auditory cues must be easily detectable and distinguishable

Notify the user of upcoming obstacles and help the user locate various objects

Shoulds:

The device should be easy for the user to use and understand

The device should be affordable and easily accessible for the desired population

Have buttons to control the volume based on the user's preference

Coulds:

Use motion detection to estimate how far away objects are, and use auditory cues to warn the user of approaching objects.

Auditory and Haptic Perception

For this design, it is important to use auditory and haptic feedback rather than visual feedback, since the device is meant to be a cross-sensory way of detecting objects without sight. The auditory cues consist of changes in tempo, pitch and loudness from the micro:bit that keep the user aware of their surroundings. The haptic feedback adds sensory cues (vibrations) alongside the auditory alerts, for when the user is in a loud place and the audio is hard to hear.
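The idea of cues that speed up and rise in pitch as the user gets closer can be sketched as a mapping from distance to beep timing. This is a minimal Python sketch under stated assumptions: it supposes a distance estimate in centimetres is available, which the current prototype does not measure, and the function name `beep_parameters`, the 200 cm range and the interval and pitch limits are hypothetical values chosen for illustration. On a real micro:bit, the results could be passed to MicroPython's `music.pitch()` and `sleep()`, which are deliberately not called here so the logic runs anywhere.

```python
# Hypothetical tuning constants (not taken from the prototype).
MAX_RANGE_CM = 200      # beyond this distance, stay silent
MIN_INTERVAL_MS = 100   # fastest beep rate, right next to the object
MAX_INTERVAL_MS = 1000  # slowest beep rate, at the edge of the range
LOW_PITCH_HZ = 440      # pitch at the edge of the range
HIGH_PITCH_HZ = 880     # pitch right next to the object


def beep_parameters(distance_cm):
    """Map an estimated distance to (interval_ms, pitch_hz), or None if out of range.

    Closer objects give a shorter interval between beeps and a higher pitch.
    """
    if distance_cm < 0:
        raise ValueError("distance must be non-negative")
    if distance_cm > MAX_RANGE_CM:
        return None
    # 0.0 when touching the object, 1.0 at the edge of the range.
    fraction = distance_cm / MAX_RANGE_CM
    interval_ms = round(MIN_INTERVAL_MS + fraction * (MAX_INTERVAL_MS - MIN_INTERVAL_MS))
    pitch_hz = round(HIGH_PITCH_HZ - fraction * (HIGH_PITCH_HZ - LOW_PITCH_HZ))
    return interval_ms, pitch_hz


if __name__ == "__main__":
    for distance in (180, 100, 20):
        print(distance, beep_parameters(distance))
```

A linear mapping is the simplest starting point; usability testing might show that a curve which speeds up sharply only at close range is easier to interpret.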

Success or in need of Improvements?

I will know whether my design is successful or in need of improvement based on user feedback and a review of the design. Once I’m able to make a fully functional prototype, it can be given to people who are visually impaired for usability testing. Usability testing is important because it shows me how real users would interact with the device and whether it is actually helpful. This product also has a very specific target audience, so feedback from the users it is designed for is crucial for making a good, functional design. Based on the reviews and feedback, I would then make changes to create a better version of the product.

Teachable Machine

To get started, I first had to find an image recognition API that I could use with the micro:bit to identify objects. After looking at several options, I came across Teachable Machine. This site let me create multiple classes and assign images to each one, so I made classes for objects that we see in daily life, such as stop signs, light poles and walking signs. For this prototype, I added only a few objects to test with; the final version, however, would be able to detect a wide variety of objects that the user could come across.

Then, using a reference that I found, I wrote a p5.js script that uses my Teachable Machine model to identify items. I tested it by connecting the webcam to my laptop and placing an object in front of the camera to see whether it would be detected and identified. From this testing, I realized that adding multiple pictures to a class made identification more accurate, as the images captured different views of the object.
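The p5.js script itself is not reproduced here. As an illustration of one step such a pipeline might need before passing results to the micro:bit, here is a minimal Python sketch of debouncing: a label is only passed on after it has been the top label for several webcam frames in a row, and it is not repeated while the same object stays in view. It assumes the script produces one top label per frame (or `None` when nothing is recognized); the class name `LabelDebouncer` and the three-frame window are hypothetical.

```python
from collections import deque


class LabelDebouncer:
    """Report a detected label once it has been the top label for several frames in a row.

    Hypothetical helper: frames_required is an illustrative value.
    """

    def __init__(self, frames_required=3):
        self.frames_required = frames_required
        self.recent = deque(maxlen=frames_required)  # top labels from the last few frames
        self.last_reported = None

    def update(self, label):
        """Take one frame's top label (or None) and return a label to send, or None."""
        self.recent.append(label)
        if len(self.recent) < self.frames_required:
            return None  # not enough frames seen yet
        first = self.recent[0]
        if any(item != first for item in self.recent):
            return None  # detections are not stable yet
        if first is None:
            # Nothing recognized for several frames, so the next object can be reported again.
            self.last_reported = None
            return None
        if first != self.last_reported:
            self.last_reported = first
            return first
        return None  # same object as last time; avoid repeating the cue
```

Waiting for a few consistent frames trades a short delay for fewer false alarms from single misclassified frames, which matters when every report becomes a sound the user has to interpret.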

P5 Script

With the script and image recognition working, I moved on to coding in the micro:bit editor. I added if statements in a forever loop that play a different sound depending on which object is detected. Initially, I wanted the micro:bit to make a beeping sound and also say the name of the object. However, the micro:bit doesn’t pronounce words very clearly, so I opted for a different melody for each object instead. This worked for a rough prototype because I didn’t have many objects, though eventually I would have to come up with a better solution. In the future, I would also add haptic feedback, which requires a vibration motor that I wasn’t able to get for this project. Instead, I bought a webcam, which was the more important component for this design because it is needed for object detection.
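The micro:bit code was built in the editor and is not reproduced here. The chain of if statements can be sketched in Python as a lookup table from label to melody. The labels and melodies are hypothetical placeholders, and the note strings follow the `NOTE[octave][:duration]` format accepted by MicroPython's `music.play()`. The `play` function is passed in as a parameter (on a micro:bit it could be `music.play`) so the logic can be tested off-device.

```python
# Hypothetical label-to-melody table; notes use MicroPython's "NOTE[octave][:duration]" style.
MELODIES = {
    "stop sign": ["c5:2", "c5:2", "c5:2"],
    "light pole": ["e4:4", "g4:4"],
    "walking sign": ["c4:2", "e4:2", "g4:2", "c5:4"],
}

DEFAULT_MELODY = ["a3:1"]  # short fallback beep for labels without their own melody


def melody_for(label):
    """Return the melody for a detected label, or None when nothing was detected."""
    if label is None:
        return None
    return MELODIES.get(label, DEFAULT_MELODY)


def handle_detection(label, play):
    """Play the melody for label with the supplied play function and return it."""
    melody = melody_for(label)
    if melody is not None:
        play(melody)
    return melody
```

Keeping the melodies in one table means adding an object only needs one new entry rather than another if statement, which helps as the number of objects grows.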

Final Prototype

In the end, I had a partially complete prototype, which is still helpful in envisioning what I wanted to do. My prototype was able to detect the small selection of objects that I added to Teachable Machine and gave auditory feedback when these objects were detected. In the future, I want to get all the components needed to make this a fully functional prototype.

Microbit Block Code

Client Meeting

From my presentation, I got plenty of useful feedback and suggestions that I’ve included as a part of the client meeting. These include:

Using object recognition in a more controlled environment, so that it is more reliable for blind users. An example of a controlled environment would be a grocery store, where I can use object recognition to detect different foods.

Having different audio cues/labels depending on how confident the detection is. Taking a flower pot as an example, it could have different audio labels depending on the confidence of the object detection. This differentiation could be a number giving the detection confidence as a percentage.
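One way to read this suggestion is to sort detections into a few confidence bands, each with its own wording. The number a classifier reports is, strictly speaking, its confidence in a prediction rather than a measured accuracy. This minimal Python sketch assumes the API gives a confidence between 0 and 1; the band boundaries (0.85 and 0.6), the function names and the wording of each label are hypothetical.

```python
# Hypothetical band boundaries for the detection confidence (0.0 to 1.0).
HIGH_THRESHOLD = 0.85
MEDIUM_THRESHOLD = 0.60


def confidence_band(confidence):
    """Return 'high', 'medium' or 'low' for a confidence between 0.0 and 1.0."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    if confidence >= HIGH_THRESHOLD:
        return "high"
    if confidence >= MEDIUM_THRESHOLD:
        return "medium"
    return "low"


def audio_label(item, confidence):
    """Choose the wording of the spoken label from the confidence band."""
    band = confidence_band(confidence)
    if band == "high":
        return item
    if band == "medium":
        return f"probably {item}"
    return f"maybe {item}, please check"
```

Three bands keep the cues easy to tell apart by ear; the exact boundaries would need tuning against real detections.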

Take-Aways

These suggestions helped me understand and identify the flaws in my design, so I’ve decided to change my idea to make my prototype more reliable for visually impaired people. Originally, I wanted a portable travel device that uses an image recognition API to detect objects and help blind people identify and avoid obstacles to travel safely. However, the outdoor environment is very complex, so focusing on a specific task like grocery shopping would make for a more reliable prototype: detection is likely to be more accurate in a controlled setting, and mistakes pose fewer safety risks. Grocery shopping is also part of independent travel and a task that can be very difficult for visually impaired people. Thus, for this next iteration, my target problem is making a device that helps visually impaired people identify foods and items in a grocery store so they can shop independently.

Another suggestion I got from the presentation was to have different audio cues that correspond to the confidence of the detection. I hadn’t thought about this in my first design, but it would be a really helpful feature: image recognition isn’t perfect, so it’s important for the user to know how confident the API is in its detection. To implement this in the prototype, I would include an audio label that first says the item name, followed by the detection confidence as a percentage. I would also need an image recognition API that reports this percentage, as the Teachable Machine setup I previously used didn’t provide it.
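The announcement order described here (item name first, then a percentage) can be sketched as a small formatting step. This minimal Python sketch assumes the API returns a confidence between 0 and 1 for each candidate item; `spoken_label` and `announce` are hypothetical names. The resulting text would still need a text-to-speech engine; one option, not used in the prototype, is the browser's Web Speech API (`speechSynthesis.speak`) on the laptop running the p5.js script, since the micro:bit's own speech was hard to understand.

```python
def spoken_label(item, confidence):
    """Return the text to speak: the item name, then the confidence as a whole percentage."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    return f"{item}, {round(confidence * 100)} percent"


def announce(scores):
    """Pick the most likely item from a {label: confidence} dict and build its spoken label."""
    if not scores:
        raise ValueError("no predictions to announce")
    item = max(scores, key=scores.get)
    return spoken_label(item, scores[item])


if __name__ == "__main__":
    print(announce({"apple": 0.12, "banana": 0.88}))
```

Rounding to a whole percentage keeps the spoken label short, which matters when the user is listening while moving through a store.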

Second Iteration

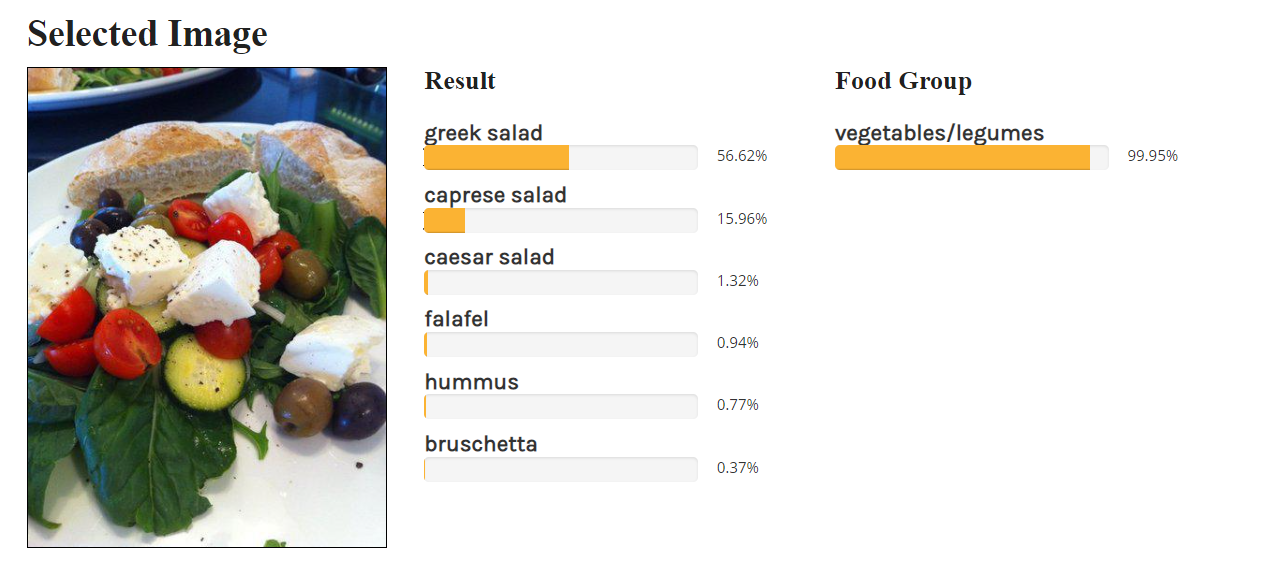

To begin, I looked for food recognition APIs that could detect different items and also give the confidence percentage. That’s when I came across the LogMeal API, which offers ingredient information recognition. This feature could detect whole foods and also extract the list of ingredients present in a dish, as well as their standard quantities. I tested it with images of foods and dishes, and it was able to identify each dish along with the food group it belonged to.

I was really happy with the output and wanted to implement it in my design. To do so, I installed Postman, an API platform for building and using APIs. It breaks the API down by organizing requests into separate folders for each category, which also helped me understand the code better. Unfortunately, I couldn’t find any tutorials on how to implement and use this API, so I wasn’t able to figure out why the code wasn’t working when I ran it. Even after troubleshooting, I still couldn’t get it to work, mainly because I didn’t know how to get image input from my webcam into the API. I emailed the API’s creators, but they hadn’t gotten back to me yet and I was short on time for completing this assignment.

For now, I have used Teachable Machine again as a substitute, changing it to suit this new idea by making the classes various food items. Unlike the LogMeal API, Teachable Machine was limited to detecting whole foods rather than dishes and foods with multiple items. In the future, I would want to connect the LogMeal API to the micro:bit to fulfil my original design goal. Personally, I found this goal hard to achieve because I don’t have advanced programming skills, and I would probably need assistance to work with the API the way I want to.